January 28, 2026 · 6 min read

Choosing the Right Speech-to-Text Model: These 8 Factors Matter Most

As a PhD researcher, I regularly need to transcribe interviews and meetings, and I often found that popular default transcription tools, such as the built-in transcriber in Microsoft Word, delivered disappointing quality. That led me to spend a lot of time thinking about speech-to-text technology. In this blog post, I'd like to share the key things I've learned about choosing the right model, and why I eventually decided to build a benchmark and transcription tool that handles this complexity so that you don't have to worry about it.

The Eight Most Important Factors When Choosing a Speech-to-Text Model

When you're comparing speech-to-text (STT) models, there's a lot to think about. Here's what I've found really makes a difference:

- Accuracy is obviously the big one. It's usually the first thing people ask about, and for good reason. Generally speaking, the more accurate a model is, the more you'll pay for it. But here's the thing: even the best models won't give you 100% accuracy, so depending on how exact your transcript needs to be, you'll have to do some editing afterwards.

- Latency matters a lot if you're doing anything time-sensitive, like live captioning or building a voice assistant. It's basically how fast the model can process your audio and give you back the transcription.

- Diarization quality is next. Diarization is the fancy term for telling different speakers apart, and models differ a lot in how well they do it. If you're transcribing meetings or interviews with multiple people, you need this to work well; otherwise, you'll spend forever figuring out who said what.

- Language support varies wildly. At the time of writing, Gladia supports close to a hundred languages, Deepgram offers different models with different language coverage, and Speechmatics handles fifty-five languages with high accuracy. To add to the complexity, dialects of the same language can sound very different or use their own words and phrases, and a model that performs well on one dialect may do noticeably worse on another.

- Then there's the question of verbatim versus readability. Sometimes you need every "um" and "ah" captured exactly as spoken. Other times, you want a cleaned-up version that's actually pleasant to read.

- Multilingual audio is tricky. If you're working with conversations where people switch between languages (say, French, English, and Dutch in the same meeting), you need a model that can handle that gracefully.

- Privacy and data location can be deal-breakers depending on your situation. Some models, like OpenAI's Whisper or Mistral's Voxtral, can run on your own machine, which is great for privacy. With API services, you may care whether the servers are in the US, Europe, or elsewhere.

- And finally, custom vocabulary. Being able to add specific product names, technical jargon, or proper nouns can make a huge difference in accuracy for specialized content.
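
The verbatim-versus-readability choice can be illustrated with the simplest possible "clean read" step: dropping filler words from a verbatim transcript. The Python sketch below makes two assumptions. The filler list is a deliberately tiny hypothetical one, and the function name `to_readable` was made up for this example. Real services that offer a readable mode typically also handle false starts, repetitions, and punctuation, and this sketch does not reproduce how any particular model does it.

```python
# Hypothetical, deliberately small set of English filler words.
FILLERS = {"um", "uh", "ah", "er", "hmm"}


def to_readable(verbatim: str) -> str:
    """Return the transcript with standalone filler words removed."""
    kept = []
    for token in verbatim.split():
        # Compare without trailing/leading punctuation, case-insensitively,
        # so "um," and "Uh" are both recognized as fillers.
        bare = token.strip(",.!?;:").lower()
        if bare not in FILLERS:
            kept.append(token)
    return " ".join(kept)


print(to_readable("So, um, we tested uh three models last week."))
```

This prints `So, we tested three models last week.`, while the verbatim version keeps every "um" and "uh" exactly as spoken.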

The Players in the Space

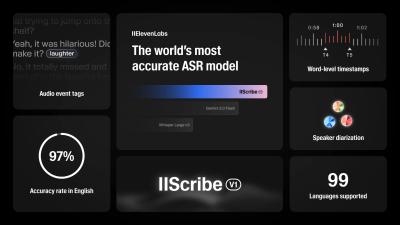

The STT landscape is pretty diverse. On the commercial side, you've got established players like Speechmatics, Deepgram, Sonix, Gladia, and AssemblyAI, plus the big tech companies (Google and Microsoft) with their cloud-integrated solutions. ElevenLabs Scribe is a more recent, solid option. These commercial models tend to offer high accuracy, lots of features, and the infrastructure to scale.

On the open-source side, Whisper is probably the best known. The French AI company Mistral also has a great open-source model called Voxtral. The big advantage here is that you can run these models on your own hardware, which keeps your data private and eliminates ongoing API costs.

Why I Built Scribewave

Here's the problem I kept running into: keeping up with all these models is exhausting. New ones come out every week, and each has different strengths and weaknesses. Testing them all against your specific audio conditions (background noise, accents, audio quality) takes forever. And honestly, most people just want their audio transcribed accurately; they don't want to become STT experts.

That's why I built Scribewave. The idea is to abstract away all this complexity and just guarantee you get the most accurate transcription possible.

We continuously benchmark twelve different models, including our own and various commercial options. When new models drop (which happens very often in the current AI race), we test them and update our benchmarks automatically.
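
A common way to benchmark STT models is to compare each model's output against a human-checked reference transcript using word error rate (WER). WER is the number of word substitutions, deletions, and insertions needed to turn the model's output into the reference, divided by the number of words in the reference. The Python sketch below computes it with a word-level edit distance. It is a generic illustration of the metric, not Scribewave's actual benchmarking code. Serious benchmarks also normalize punctuation, numbers, and spelling variants before scoring, which this sketch skips apart from lowercasing.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / reference word count."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # Word-level Levenshtein distance, computed one row at a time.
    # prev[j] is the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from output
                curr[j - 1] + 1,     # insertion: extra word in output
                prev[j - 1] + cost,  # substitution, or a match when cost == 0
            )
        prev = curr
    return prev[len(hyp)] / len(ref)


print(word_error_rate("the meeting starts at nine", "the meeting start at nine"))
```

One wrong word out of five gives a WER of `0.2`. Averaging this over many files per model, grouped by conditions like background noise or accent, is what makes a comparison between models meaningful.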

When you upload a file to Scribewave, you can easily specify your needs: Do you need custom vocabulary? Is the audio multilingual? Do you want verbatim or readable text? Based on your settings and the characteristics of your audio, such as background noise or dialect, we automatically pick the best model for that specific file. You don't have to think about whether ElevenLabs, Speechmatics, or Deepgram would work better. We've already tested them all and know which one will give you the best results.
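
To make the general idea of per-file model routing concrete, here is a minimal Python sketch. It first filters candidate models on hard requirements (language, code-switching, custom vocabulary). It then picks the one with the lowest benchmarked error rate for the detected audio condition. Everything in it is hypothetical: the `Job` and `Candidate` fields, the model names, and the error rates are placeholders. They are not Scribewave's benchmark data or its actual selection logic.

```python
from dataclasses import dataclass


@dataclass
class Job:
    """Hypothetical per-file settings plus detected audio traits."""
    language: str
    multilingual: bool
    needs_custom_vocabulary: bool
    noisy: bool


@dataclass
class Candidate:
    """Hypothetical model entry with made-up capabilities and benchmark WERs."""
    name: str
    languages: set[str]
    handles_code_switching: bool
    supports_custom_vocabulary: bool
    wer_clean: float
    wer_noisy: float


def pick_model(job: Job, candidates: list[Candidate]) -> Candidate:
    # Step 1: keep only models that meet this file's hard requirements.
    eligible = [
        c for c in candidates
        if job.language in c.languages
        and (c.handles_code_switching or not job.multilingual)
        and (c.supports_custom_vocabulary or not job.needs_custom_vocabulary)
    ]
    if not eligible:
        raise LookupError("no model meets the requirements for this file")
    # Step 2: choose the lowest benchmarked error rate for this audio condition.
    return min(eligible, key=lambda c: c.wer_noisy if job.noisy else c.wer_clean)


# Placeholder catalogue; names and numbers are invented for illustration.
models = [
    Candidate("model-a", {"en", "fr", "nl"}, True, False, 0.08, 0.15),
    Candidate("model-b", {"en", "fr"}, False, True, 0.06, 0.12),
    Candidate("model-c", {"en", "nl"}, True, True, 0.09, 0.11),
]
job = Job(language="nl", multilingual=True, needs_custom_vocabulary=False, noisy=True)
print(pick_model(job, models).name)
```

This prints `model-c`. `model-b` is ruled out because it doesn't cover Dutch, and `model-c` beats `model-a` on noisy audio. Splitting hard requirements from a ranking step keeps the rules simple: features a file needs are never traded off against a slightly better error rate.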

Beyond Just Transcription

But Scribewave isn't just about picking the right model. I wanted to build a complete workflow tool:

- The editor lets you correct every word, and everything stays perfectly synced with the audio. You can scroll through the transcript while the audio plays, add or remove words, make corrections, all while maintaining that sync.

- Translation is built right in. Translate your transcript into another language, and it stays synced with the original audio. You can edit the translation too.

- The AI assistant can analyze your corrected transcript and answer questions about it, which is incredibly useful for pulling insights from long conversations or meetings. It can also clean up your transcript to make it easier to read, while letting you switch back to the original when needed.

- Export is flexible: Word documents, various other formats, whatever you need to fit into your existing workflow.

People sometimes ask me why I'm building a transcription service when all these big companies are active in this field. My answer is that getting a good transcript depends so much on your specific audio conditions and preferences that it's very hard to achieve the best result using the same model every time. Also, you usually want to do something with the transcript: edit it, translate it, or analyze it, to name just a few.

With Scribewave, the whole point is this: you shouldn't have to worry about model selection, settings, or keeping up with the latest STT developments. Upload your file, and Scribewave handles the rest. You get an accurate transcription as quickly as possible, and you can focus on actually using it rather than fighting with the technology.

About the author

Ulysse Maes

In a world where Ulysse can't out-flex The Rock or out-charm Timothée Chalamet, he triumphs as the mastermind behind Scribewave, fiercely defending his throne as the king of nerds in beautiful Antwerp, Belgium.
