April 9, 2025 · 7 min read

OpenAI launches GPT-4o-transcribe: A powerful yet limited transcription model

In a somewhat low-key announcement, OpenAI has introduced two new speech-to-text models that outperform their predecessor, Whisper V3, across multiple languages. The new models, gpt-4o-transcribe and gpt-4o-mini-transcribe, add to OpenAI's portfolio of increasingly capable audio models, though they come with important limitations that potential users should consider.

OpenAI's Quiet Advancement in Audio Technology

While OpenAI regularly generates headlines with their LLM releases and updates to their video generator Sora, their audio developments are often announced more quietly (maybe because they’re afraid too much publicity might spark concerns around copyrighted material used for training). Despite this lower profile, the company has been steadily building an impressive portfolio of audio technologies, particularly in text-to-speech (TTS) with remarkably natural-sounding results.

The latest addition to this growing audio ecosystem is a new closed-source speech-to-text (STT) API under the gpt-4o brand. It represents a substantial improvement over their previous model, Whisper V3, yet it arrived with much less publicity than their other recent launches: just a blog post on their site.

The new offerings consist of two closed-source automatic speech-to-text models:

- gpt-4o-transcribe: The more powerful and accurate of the two models

- gpt-4o-mini-transcribe: A lighter alternative that balances performance with efficiency

These models are available through OpenAI's API at a relatively affordable price point, making accurate transcription more accessible to developers.
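Since the models are only exposed through the API, here is a minimal Python sketch of a transcription call. It assumes the official `openai` Python package (version 1 or later), where transcription is available as `client.audio.transcriptions.create(model=..., file=...)`. The `transcribe` wrapper is a hypothetical helper, written to take the client as a parameter so it can be exercised without network access.

```python
from pathlib import Path


def transcribe(client, path: str, model: str = "gpt-4o-mini-transcribe") -> str:
    """Send one audio file to OpenAI's transcription endpoint and return the text.

    Hypothetical helper: `client` is expected to be an `openai.OpenAI()` instance
    (openai-python v1+). It is passed in rather than created here so the function
    has no hard dependency on network access or an API key.
    """
    with Path(path).open("rb") as audio_file:
        # The SDK uploads the open file object as multipart form data.
        result = client.audio.transcriptions.create(model=model, file=audio_file)
    # With the default JSON response format, the transcript is in `.text`.
    return result.text
```

In practice you would call something like `transcribe(OpenAI(), "interview.mp3")` after `from openai import OpenAI`, with the `OPENAI_API_KEY` environment variable set; passing `model="gpt-4o-transcribe"` selects the larger model.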

How Do They Compare to Whisper?

OpenAI's Whisper has been widely adopted as an open-source transcription solution, and OpenAI claims the new models outperform it in most languages. The size of the improvement, however, varies significantly by language.

Most of the improvements come in languages where Whisper was previously weak. For Malayalam, for example, the gpt-4o models show substantially lower word error rates (WER) than Whisper. For languages where Whisper already performed well, there is some improvement too, but the relative gains are more modest.
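Word error rate is the word-level edit distance between a reference transcript and the model's output, divided by the number of reference words: WER = (S + D + I) / N, with S substitutions, D deletions, I insertions and N reference words. A minimal sketch, assuming simple lowercasing and whitespace tokenization; published benchmarks usually apply more elaborate text normalization before scoring.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # prev[j] holds the edit distance between the reference words processed
    # so far and the first j hypothesis words (row 0: j insertions).
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)  # column 0: i deletions
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from output
                curr[j - 1] + 1,     # insertion: extra word in output
                prev[j - 1] + cost,  # substitution, or a match when cost == 0
            )
        prev = curr
    return prev[len(hyp)] / len(ref)
```

With this definition, `word_error_rate("the cat sat on the mat", "the cat sit on mat")` is 2/6, about 0.33 (one substitution, one deletion). Because insertions count as errors, WER can exceed 1.0 when a model adds words that were never spoken.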

This uneven improvement suggests that OpenAI focused on fixing Whisper's weak spots rather than improving all languages uniformly, and it seems to confirm the “law of diminishing returns”: for each language, accuracy appears to improve asymptotically, approaching near-perfect transcription without ever becoming completely flawless.

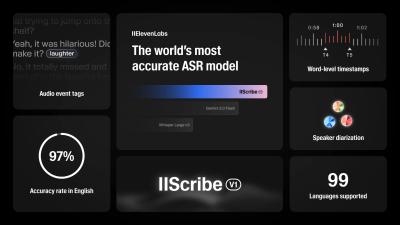

These developments fit into an increasingly competitive AI transcription market, in which Google's Gemini API offers similar functionality, although Gemini itself is a natively multimodal language model. Independent benchmarks by Artificial Analysis confirm that gpt-4o-transcribe holds its own against industry leaders: it is tied for second place with Speechmatics and AssemblyAI, just one percentage point behind ElevenLabs Scribe.

Price Versus Performance

One of the most compelling aspects of the new models is their pricing structure: gpt-4o-mini-transcribe is priced at half the cost of OpenAI's own Whisper endpoint. Only Groq's and Fireworks' hosted versions of Whisper V3 come in at a lower price point. This competitive pricing makes it an attractive option for developers already working within the OpenAI ecosystem.
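To put the price difference in numbers, here is a back-of-the-envelope sketch. It assumes the estimated per-minute prices OpenAI published at launch ($0.006 per minute for whisper-1 and gpt-4o-transcribe, $0.003 per minute for gpt-4o-mini-transcribe); prices change, so treat the constants as placeholders and check OpenAI's pricing page before relying on them.

```python
# Assumed per-minute prices in USD (OpenAI's launch estimates; verify before use).
PRICE_PER_MINUTE_USD = {
    "whisper-1": 0.006,
    "gpt-4o-transcribe": 0.006,
    "gpt-4o-mini-transcribe": 0.003,
}


def transcription_cost(model: str, minutes: float) -> float:
    """Estimated cost in USD of transcribing `minutes` of audio with `model`."""
    if minutes < 0:
        raise ValueError("minutes must be non-negative")
    return PRICE_PER_MINUTE_USD[model] * minutes
```

At these assumed rates, 100 hours (6,000 minutes) of audio comes to about $36 with whisper-1 and about $18 with gpt-4o-mini-transcribe.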

Significant Limitations to Consider

Despite impressive performance metrics, several significant limitations may prevent gpt-4o-transcribe from becoming the go-to solution for many use cases:

- 🚫 Access limitations: Currently the models can only be accessed through code, with no user-friendly interface to try them out. You can, however, try them for free via Scribewave's online transcription tool.

- 📁 File size restrictions: File uploads to the OpenAI audio API are limited to 25 MB, which means longer audio or video files cannot be transcribed in a single request unless they are first compressed or split into smaller chunks.

- 😔 Missing features: It lacks essential features such as word-level timestamps and speaker recognition (diarization).

- 📪 Closed-source nature: Because the new models are closed-source, unlike Whisper, they can't be fine-tuned for specific domains or vocabularies the way the open-source Whisper can.

- 🕵️‍♀️ Privacy concerns: OpenAI may use uploaded data to train their models, which raises privacy concerns, especially for European users.

While Whisper itself offers more customizability because it is open source, OpenAI's hosted Whisper endpoint suffers from the same limited feature set and 25 MB maximum file size.
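Because the cap applies to file size rather than duration, how much audio fits in one request depends on the encoding bitrate. A rough sketch, assuming the 25 MB limit means 25 × 1024 × 1024 bytes and a constant-bitrate encoding; `max_chunk_seconds` and `plan_chunks` are hypothetical helpers, and the resulting boundaries would still need to be cut with a tool such as ffmpeg before each piece is uploaded.

```python
import math

# Assumption: the 25 MB upload limit interpreted as 25 * 1024 * 1024 bytes.
MAX_UPLOAD_BYTES = 25 * 1024 * 1024


def max_chunk_seconds(bitrate_kbps: float, limit_bytes: int = MAX_UPLOAD_BYTES) -> float:
    """Longest constant-bitrate segment, in seconds, that fits under the limit."""
    bytes_per_second = bitrate_kbps * 1000 / 8
    return limit_bytes / bytes_per_second


def plan_chunks(duration_s: float, bitrate_kbps: float, safety: float = 0.95) -> list[tuple[float, float]]:
    """Split [0, duration_s) into contiguous (start, end) segments under the limit.

    `safety` is an assumed margin that leaves headroom for container overhead
    and metadata, so each segment uses at most 95% of the byte budget by default.
    """
    chunk = max_chunk_seconds(bitrate_kbps) * safety
    n = math.ceil(duration_s / chunk)
    return [(i * chunk, min((i + 1) * chunk, duration_s)) for i in range(n)]
```

At 128 kbps, 25 MB holds roughly 1,638 seconds (about 27 minutes) of audio, so a two-hour recording needs five chunks with the default margin; re-encoding at 64 kbps brings that down to three.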

Is It Right for Your Needs?

Whether gpt-4o-transcribe is the right choice depends entirely on your specific requirements:

- For developers building within the OpenAI ecosystem: gpt-4o-mini-transcribe offers excellent value for money and solid performance

- For non-technical users seeking a comprehensive solution: Full transcription suites like Scribewave provide a more accessible and feature-rich experience

Some of the strong points of Scribewave's transcription suite include:

- Even higher accuracy in 32 languages, according to benchmarks

- A free tier with a user-friendly interface is available for testing

- It supports uploading large audio and video files that are several hours long

- It recognizes different speakers out of the box

- Scribewave returns nicely formatted results with multiple export options, such as Word, Google Docs and various subtitle formats

Conclusion

OpenAI's new transcription models represent impressive achievements in transcription accuracy, particularly in previously underserved languages. However, their utility is constrained by practical limitations that make them unsuitable for many real-world applications.

If you're a developer already working with OpenAI's tools and need cost-effective transcription capabilities, gpt-4o-mini-transcribe offers compelling value. However, if you're looking for a complete, user-friendly transcription solution with features like speaker recognition and flexible export options, alternatives like Scribewave would be more appropriate.

As the transcription space continues to evolve, we can expect further refinements to address these limitations, but for now, users should carefully match their specific needs against the capabilities and constraints of each available solution.

About the author

Ulysse Maes

In a world where Ulysse can't out-flex The Rock or out-charm Timothée Chalamet, he triumphs as the mastermind behind Scribewave, fiercely defending his throne as the king of nerds in beautiful Antwerp, Belgium.
